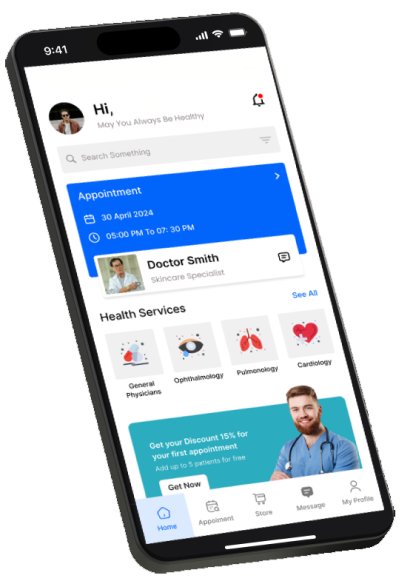

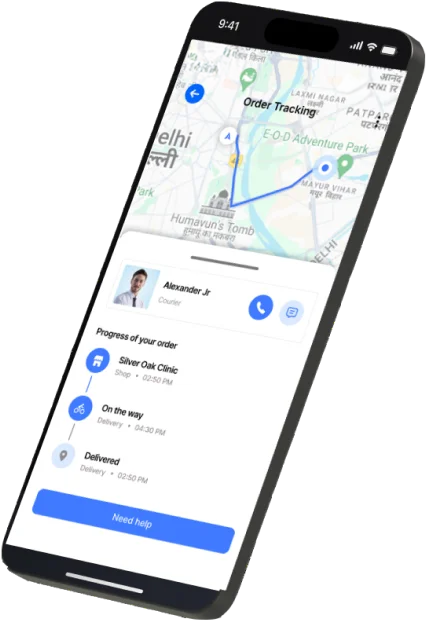

Your Trusted Mobile App & Web Development Company

See How We’ve Grown

We are a focused, technology-driven company that understands your business needs and brings deep expertise in mobile and web application development. Our expert team is dedicated to delivering excellence, and our developers will help you build the web and mobile applications your online business needs.

The websites and apps we develop will help your business reach and engage the right audience online. Our services support businesses in the journey of building web and app-based software, providing a great user experience. With over 11 years of experience, XenelSoft has earned a reputation for trust in the market for its advanced, premium solutions because our goal is not just to meet your expectations, but to exceed them.

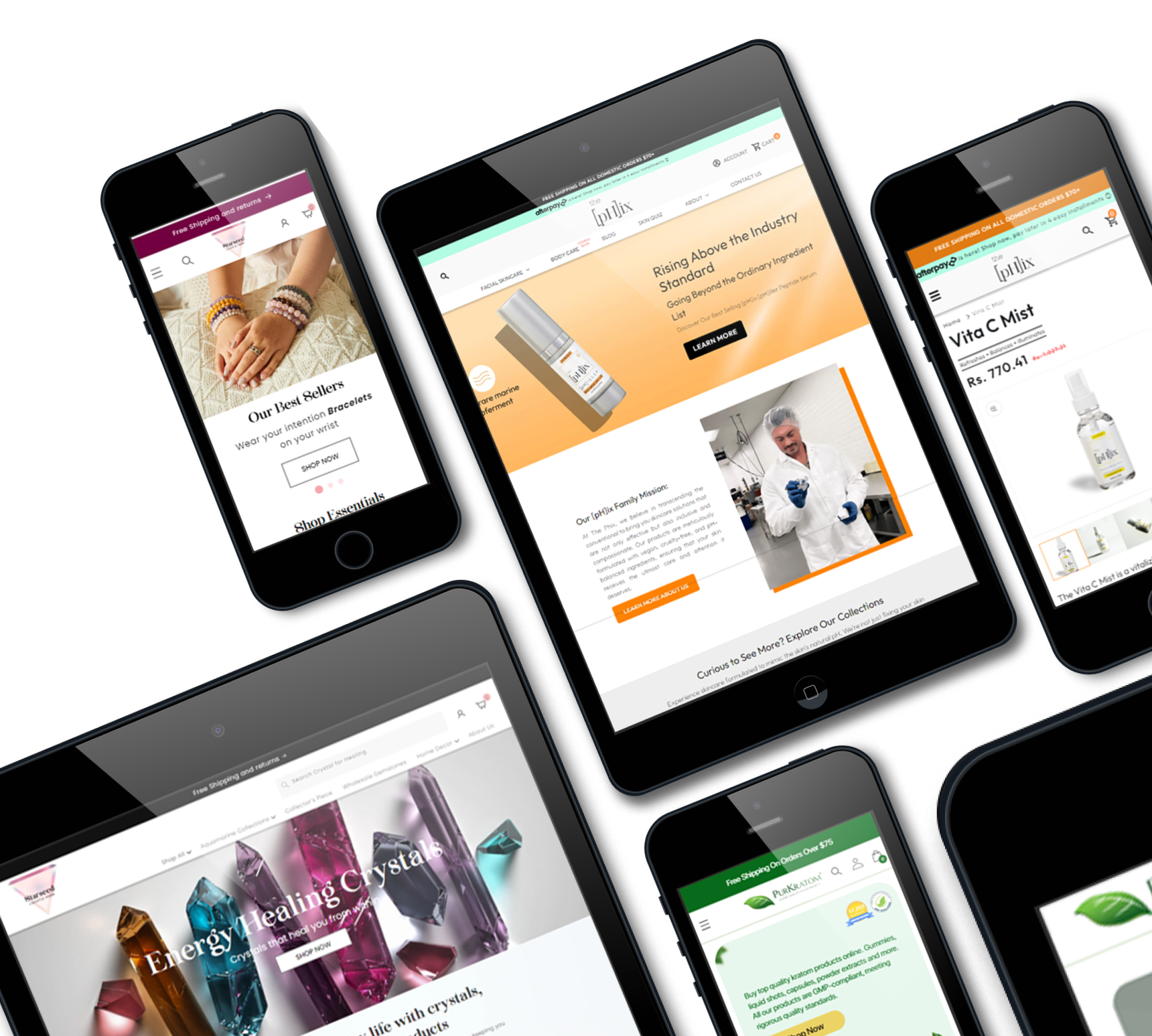

Pick the Right Solution for Your Business

Whether you are ideating, growing, or optimizing, we’re here to support you with our expert solutions.

Our Expertise

Our goal is to understand your business needs so deeply that our solutions naturally fit, ensuring your success.

Let’s Build Something Amazing Together

Industries We Work With

We're proud to serve industries that keep the world's economy moving. Each industry deserves a custom solution.

Our Achievements Speak for Us

We don’t work for awards, but they remind us we’re on the right path. Dedication, long hours, and a commitment to quality have earned us industry recognition. As an award-winning agency, delivering quality isn’t just a goal; it’s our promise. These awards aren’t just decorations; they are proof of our efforts, built on tried-and-tested methods and a collaborative approach that gives our clients innovative ideas and the best solutions.

Proud Moments

One of our notable achievements is being honored by Dainik Jagran for excellence in IT services. This recognition reflects our dedication to helping global clients make smarter tech decisions and achieve growth. It also highlights our focus on building smooth processes, delivering impactful customer experiences, and providing quick, effective tech solutions. We continue to work hard to deliver our best in every project we take on.

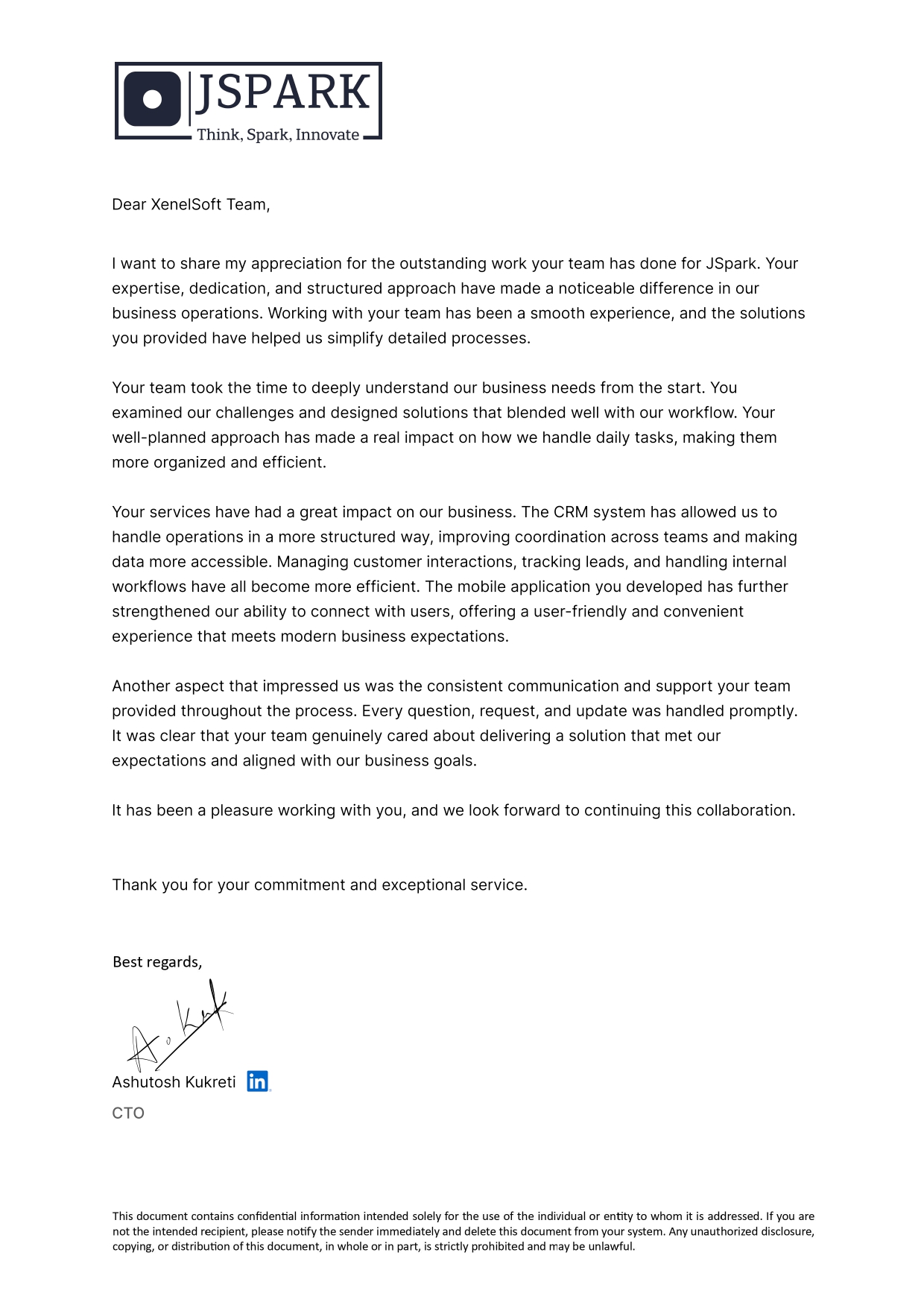

Trusted by Our Clients

Their success is our success—find out what they have to say.

Brands That Believe in Us

Success starts with our valued partners.

Explore Our Insights

Stay informed with our thoughtful articles and industry insights.

How to Remove Checkout Fields from WooCommerce Checkout pag...

With the growing demands of eCommerce, businesses increasingly require customized checkout pages designed to their speci...

Mastering Google SEO: The Crucial Role of Indexing and Crawl...

Many SEOs are now going back to learn about website indexing and crawling as the evolution of Google SEO Updat...

8 Proven Ways to Use Generative AI for Effective Marketing C...

Generative AI is a proven and valuable tool for marketers to market engaging and valuable content on search engines. I...

How Marketers Use Generative AI for Search

Search Generative Experience is the latest update from Google which enables search engines like Google to use Generative...

Frequently Asked Questions

Your Questions, Answered

Find quick solutions and helpful insights to get all the information you need.

Have More Queries?

Healthcare | E-commerce | IT & Consulting | Telecommunications | Automobiles | Insurance | Gems & Jewels | Logistics | Social Media | Startups | Ed-Tech | Event Management | Food Delivery | Hospitality | Entertainment | Crypto | Travel & Tourism | Finance | Retail | Real Estate & more!

We provide personalized solutions for businesses of all sizes, whether you're a startup or an established brand.

We work with the latest technologies and frameworks to ensure your project is innovative and future-proof. Some of the technologies we specialize in include:

Mobile: React Native, Flutter, iOS, Ionic, Android, Java, Kotlin, Swift

Web Development: HTML5, CSS3, JavaScript, Angular, React, jQuery, Vue.js, Bootstrap

Backend: Node.js, PHP, Java, Python, Ruby on Rails, Laravel

CMS: WordPress

Database: MySQL, MongoDB, PostgreSQL

E-Commerce: Shopify, Magento

We’re Just One Click Away

Send us your details, and we’ll offer the support you need.

XenelSoft

Worldwide Presence

As a leading digital solutions company, XenelSoft operates globally, delivering services from multiple locations around the world.

B-79, First floor, Sector-63, Noida-201301, India.

11 Woodlands Close, #03-35 Woodlands 11, Singapore (737853)

70 Manning Road, BS4 1FL, Bristol, United Kingdom

4380 South Service Road, Unit 14, Burlington, ON

General Enquiry

Be the First to Know –

Join Us